In some previous posts, I talked about the benefits of doing backups to Oracle's cloud...if you run the numbers, it will be significantly (orders of magnitude) less expensive than backups on prem or to other cloud vendors. It's safer than keeping backups geographically close to you, they're very fast (depending on your WAN speed, the storage array's write speed will be the bottleneck for your restore), and they're encrypted, compressed and secured. Once you have everything scripted...it's "set it and forget it." The uptime far exceeds typical on-site backup MML systems...which is important because failing to do timely archivelog backups may translate into a database outage.

I've really only had one complaint until now. Here's the full story...skip down to "The Solution" if you're impatient.

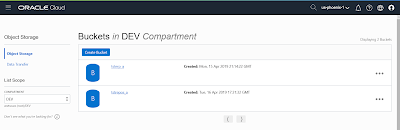

When I first helped a customer set up their backups to Oracle's Classic backup service (pre-OCI), there was a problem. The design called for each database's backups to go into its own bucket. When the non-prod databases were refreshed from production, they wanted to delete the bucket that held those backups. No problem...there's a big red delete button on the bucket page, right? Wrong! You can't delete a bucket that has files in it. When you do a backup of even a medium-sized database, you can have hundreds of thousands of files in it. The only way to delete files on the console is to select 100 at a time and click delete. I had 10 databases/buckets and over a million files, and it took me about 5 seconds to delete 100 files. If I did nothing else...we're talking ~14 hrs of work. In reality it was going to take somebody days to delete the backups after every refresh. Completely unacceptable...
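Here's the back-of-the-envelope math behind that ~14 hrs, as a quick sketch. The function name is made up for illustration, and it assumes exactly 1,000,000 files (I had "over a million") with no pauses between batches.

```python
import math

def console_delete_hours(file_count, batch_size=100, seconds_per_batch=5.0):
    """Hours of nonstop clicking to clear file_count objects in the console.

    Assumes every batch takes the same time and nobody takes a break.
    """
    batches = math.ceil(file_count / batch_size)
    return batches * seconds_per_batch / 3600

# 1,000,000 files / 100 per batch = 10,000 batches at 5 seconds each
print(f"{console_delete_hours(1_000_000):.1f} hours")  # prints "13.9 hours"
```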

I opened an SR to ask for a better way. They pointed me to CloudBerry Explorer (which is a pretty cool product). CBE allows you to pull up the files in Oracle's (or virtually any other) cloud in an interface similar to Windows File Explorer. Great! I set it up, selected all the files I wanted to delete and clicked the little delete button. After the files were indexed, it kicked off multiple threads to get this done. Three at a time (about 3 sec per file), they started to delete. I left my laptop to it over the weekend. When I came back on Monday...it was still deleting them.

I updated the SR...they pointed me to using curl in batch to hit the API (Doc ID 2289491.1). Cool! I love Linux scripting...it took a while to set it up, but eventually I got it to work...and one by one (about 3 sec per file) they started to delete. Argh.
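To put the per-file rate in perspective, here's a rough sketch of how long a million deletes takes at about 3 seconds each. The helper name is hypothetical. It again assumes exactly 1,000,000 files, and it assumes parallel streams scale perfectly, which is optimistic.

```python
def days_to_delete(file_count, seconds_per_file, parallel_streams=1):
    """Wall-clock days to delete file_count objects at a fixed per-file cost."""
    total_seconds = file_count * seconds_per_file / parallel_streams
    return total_seconds / 86_400  # seconds in a day

one_by_one = days_to_delete(1_000_000, 3.0)
three_at_a_time = days_to_delete(1_000_000, 3.0, parallel_streams=3)

# prints "one by one: 34.7 days, three at a time: 11.6 days"
print(f"one by one: {one_by_one:.1f} days, three at a time: {three_at_a_time:.1f} days")
```

Either way, a weekend was never going to be enough.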

A colleague of mine wrote a similar procedure in Perl...but it was using the same API, and had the same results.

I updated the SR...they essentially said there's no way to do what I'm trying to do any faster.

I opened a new SR for an enhancement request to add a way to do mass deletes...here's the response:

"The expectation to delete the bucket directly is like a Self Destructive program ,Many customer will accidentally delete the container and will come back to US for recovery which is not possible."

...and then they closed my enhancement request.

My first thought was to send an email to Linus Torvalds and let him know about the terrible mistake he made introducing rm to his OS. (sarcasm) My second thought was that the company that charges for my TBs of storage usage isn't motivated to help me delete the storage.

The Solution: Lifecycle the files out.

1. First enable Service Permissions (click here).

2. In OCI, go to Object Storage, click on the bucket you want to clean up and click on "Lifecycle Policy Rules." Click on "Create Rule."

3. Put in anything for the name (it's going to be deleted in a minute, so this doesn't matter). Select "Delete" from the lifecycle action and set the number of days to 1. Leave the state at the default Enabled, then click the Create button. This will immediately start deleting all the files in the bucket that are over 1 day old...in my case, that was all of them.

4. The Approximate Count for the bucket will update in a few minutes. Soon (within a minute or so) it will say 0 objects. Now when you click the red Delete button to remove the bucket, it will go away. I was able to remove about 150 terabytes in a few seconds.
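If you'd rather script the rule than click through the console, here's a minimal sketch of the same rule as a JSON document. I did this through the console, so treat this as untested: the field names (`name`, `action`, `timeAmount`, `timeUnit`, `isEnabled`, wrapped in `items`) are what I understand the OCI Object Storage lifecycle policy body to look like. Check them against the current OCI documentation before using them. The function name and default rule name are made up.

```python
import json

def build_delete_rule(name="purge-everything", days=1):
    """Build a lifecycle policy body with one rule that deletes objects older than `days`.

    Field names are assumptions based on the OCI lifecycle policy format;
    verify them against Oracle's documentation.
    """
    return {
        "items": [
            {
                "name": name,          # any name works, the rule is temporary
                "action": "DELETE",    # the "Delete" lifecycle action from step 3
                "timeAmount": days,    # number of days from step 3
                "timeUnit": "DAYS",
                "isEnabled": True,     # the default "Enabled" state
            }
        ]
    }

if __name__ == "__main__":
    # Write or paste this JSON wherever your tooling expects the policy body.
    print(json.dumps(build_delete_rule(), indent=2))
```

This only builds the document; it doesn't call Oracle. You still need the service permissions from step 1 for the rule to take effect.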

I have no idea why Oracle made this so difficult...their job is to provide a resource, not protect us from making a mistake. Anyway, I hope this helps some of you out.
